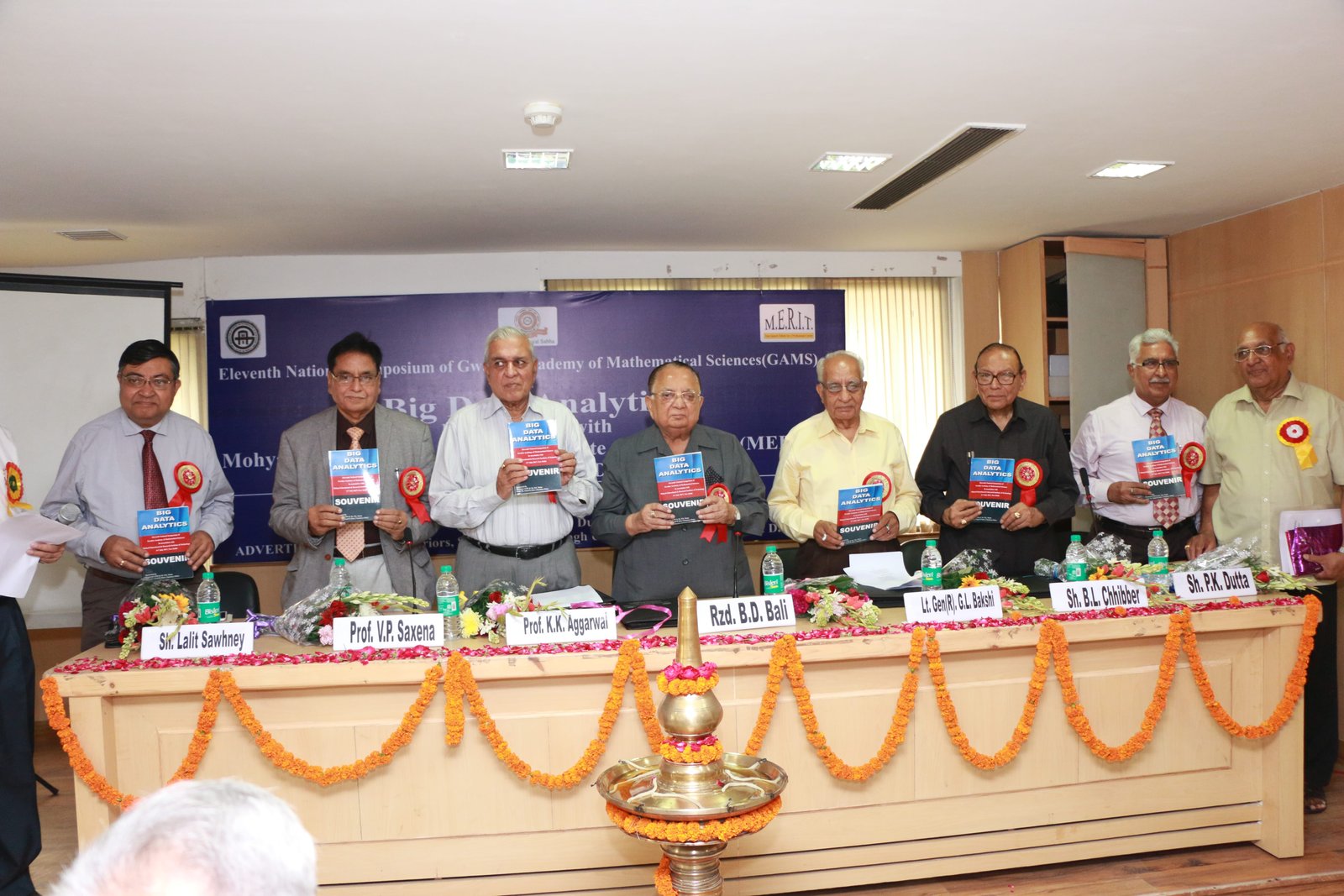

MERIT News

Cover Page for IGNOU Assignment

LMS Assignment Submission Process

Assignment Submission Link - TEE JUNE 2024

1. BCA-ODL Schedule, Assignments and Study Material

3. MCA - OL Schedule, Assignments and Study Material

4. MCA NEW - ODL Schedule, Assignments and Study Material

5. PGDCA Schedule, Assignments and Study Material

COPA

DATABASE SYSTEM ASSISTANT

ABOUT MERIT

OFFICE

Notice Board

- Faculty Required for MERIT

Qualification: MCA, with at least two years' teaching experience at a recognised institute after completing the MCA.

Please contact:

rkd_nr@yahoo.com

01141325252